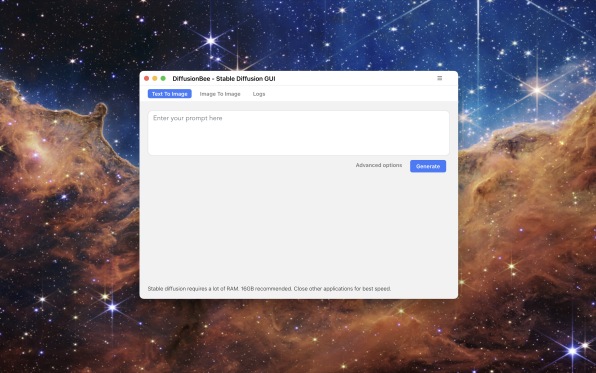

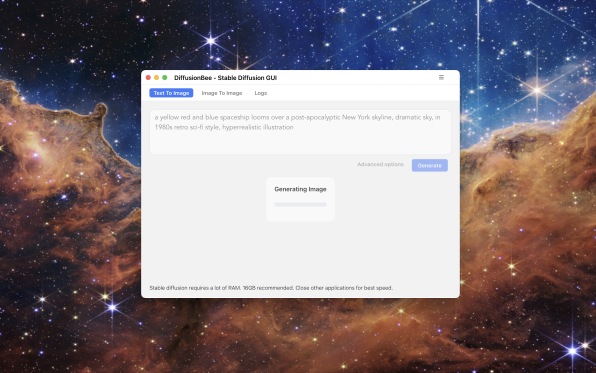

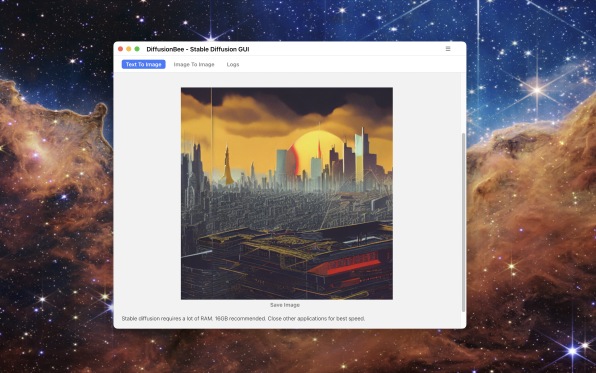

Impossibly realistic and creative AI-generated art has been appearing more and more frequently over the past few months, but making it used to be possible for only a select few. Now anyone can run a full graphical version of the text-to-image artificial intelligence Stable Diffusion on any Apple Silicon-based Mac, with no technical knowledge whatsoever. You just have to drop an app into your Applications folder, double-click it, write your prompt, and magic happens. It’s called Diffusion Bee, and it is available as a free download.

Until now, if you wanted to partake in the awesome wonder that is text-to-image AI, you had only two options: pay to use a service like MidJourney or DALL-E (if you were lucky enough to get an invite), or get into the terminal to install Stable Diffusion, the open source, free-to-use tool. While there are step-by-step guides to installing all the required components, they often miss little things, which makes the process an exercise in frustration for us mere mortals.

Nobody is closing this Pandora’s Box now

All that has ended, thanks to Divam Gupta, an AI research engineer at Meta. Now, anyone with an Apple Silicon machine can get Stable Diffusion running more easily than installing Adobe Photoshop. A simple drag-and-drop is all that’s required, with no need to sign up for Adobe or any other service. It has no fees and doesn’t even require an internet connection beyond the initial download of the models needed to make it work. No information is uploaded to the cloud. It runs on any Mac with an M1 or M2 processor, taking advantage of the massive processing muscle built into these nimble chips. The developers recommend 16GB of RAM because machine learning is very memory intensive, but I have run it on a MacBook Air M1 with 8GB of RAM without a single glitch. It took about five to six minutes to generate an image (one tip: close all your other apps to give Diffusion Bee as much memory as possible).

It is a remarkable, albeit expected, development that will surely cause an explosion of new images and creativity, with consequences that, realistically, we can’t yet foresee.

While many illustrators have lambasted these text-to-image tools, most of the time out of fear of losing their jobs, there’s another side to this debate: a growing feeling that this kind of software will be just another tool in the arsenal of creatives worldwide.

A couple of weeks ago, video artist and director Paul Trillo told me that he believed this tool “isn’t going to be taking any jobs away from visual-effects artists.” If anything, he anticipates, “it’s going to create efficiencies to the work they’re already doing. It will open the door to entirely new kinds of techniques as well as allow for lower-budget projects to have photorealistic VFX.”

A renaissance

U.K.-based art director and AR/XR/3D artist Josephine Miller echoed these feelings, telling me that the technology was enabling her to do more things. “Sometimes I feed my designs to DALL-E, which produces variations of it,” she describes, “and then I discover something unexpected that I didn’t think about, which takes me into a new creative direction.” Miller—who has been working with a team of artists and developers on an AR filter that will allow Instagram users to see expanded paintings—also says that she has used it to present variations of her work to clients. “I tell them this is my design, but these others were created by the AI for you to see,” Miller says, “sometimes they find something they like in a variation that is incorporated into the final design.”

Manuel “Manu.Vision” Sainsily, an artist and XR design manager at Unity, also believes that these tools are extremely powerful for creative people. They are also inevitable, he tells me, and they open a path for imaginative people with no visual execution skills to create something visual. “It can empower people who have no power,” he says. Miller agrees, pointing to one particular case in which disabled kids who couldn’t draw were suddenly able to create images with DALL-E using only their words. “It was rather magical,” she says.

Sainsily believes that this technology will lead to a renaissance, much like we have experienced before with other technical revolutions, like digital video editing, desktop publishing, or photography. We have been remixing for centuries. This AI technology just makes the process faster. And yes, that will bring a lot of change to the industry but, as with other revolutions, it will also offer incredible opportunities.

Regardless of your views on having a tool like Stable Diffusion readily available as a one-click app, the fact is, this creative moment is inevitable. While there will be laws and lawsuits trying to curb the use of sampling, as happened with music, it’s going to become increasingly difficult to enforce any control. That is even more true when you consider that, right now, visual artists process bits and pieces of other people’s work to create everything from storyboards to full artwork, using Photoshop and other tools, without crediting the original samples. It’s hard to blame AI for reusing other people’s work when the practice is already common across many visual industries. “People already remix regularly to create new things. AI just makes it faster,” Sainsily points out.

In the end, it feels like any regulation will fall flat, given current industry practices and the very nature of the AI tools. And the technology is only going to get more sophisticated with time, eventually becoming truly synthetic and erasing any trace of the original work. We may as well download this early version of the technology and start working with it to our advantage.
